Complete Guide to Manager Evaluation and Leadership Diagnostics


What Modern Evaluations Measure and Why They Matter

Organizations thrive when decision-makers can see the strengths and blind spots of their leaders with clarity. Robust evaluations go far beyond generic personality quizzes; they connect behaviors to business outcomes, model risk under pressure, and reveal how individuals collaborate, influence, and execute. Effective diagnostics examine cognitive agility, emotional regulation, learning velocity, and practical judgment across varied contexts, producing insights that are timely, comparative, and actionable for real roles.

Leaders often validate their instincts through a management assessment test that blends psychometrics and simulation-based exercises. The best programs triangulate data from scenario work, structured interviews, and work samples, then convert findings into a development blueprint that aligns with strategic goals. When reliability and validity are established, the outputs inform high-stakes choices with confidence rather than guesswork.

For readers, the value shows up in three places: hiring accuracy, promotion readiness, and targeted upskilling. Hiring accuracy improves by filtering out false positives and surfacing overlooked talent with high potential. Promotion readiness gains consistency through calibrated standards and job-relevant criteria. Upskilling accelerates because the feedback ties directly to daily responsibilities and measurable behaviors, making coaching specific, fair, and motivating.

- Sharper role fit through evidence-based behavioral indicators.

- Faster ramp-up thanks to tailored development priorities.

- Reduced attrition by aligning capabilities with expectations.

- Better succession pipelines with transparent, comparative data.

Benefits, Outcomes, and Strategic Advantages

Executives use rigorous evaluation to de-risk leadership bets and unlock productivity. When diagnostic insights feed workforce planning, teams can anticipate skills gaps, staff mission-critical projects wisely, and set learning agendas that reflect where the market is heading. Granular feedback also energizes high performers, because it shows a clear path to bigger impact and provides a shared language for coaching conversations.

Decision quality improves when a portfolio of management assessment tools supports hiring, succession, and targeted development. Data-informed calibration helps avoid political distortions, while comparative benchmarks reveal how leaders stack up against internal cohorts and external standards. Over time, organizations that operationalize these practices ship projects faster, protect culture during change, and strengthen the managerial middle where execution lives and customer outcomes are won or lost.

Critically, strategic HR partners turn reports into repeatable rituals. Quarterly talent reviews, skill sprints, and leadership labs transform one-off assessments into a continuous system. That rhythm ensures feedback doesn’t collect dust, feeds into performance enablement, and ties to compensation only after growth opportunities are real and resourced.

- Clearer promotion criteria that employees perceive as fair and consistent.

- Focused investments in training with a measurable return on capability.

- Improved diversity outcomes through structured, bias-aware evaluation.

- Stronger engagement due to personalized growth roadmaps and coaching.

Methods, Formats, and Practical Comparison

Different situations call for different diagnostic formats. Early-career screening benefits from scalable tools with strong predictive validity, while senior roles warrant immersive simulations that mirror ambiguity, stakeholder conflict, and time pressure. A balanced approach mixes speed with depth: brief inventories for broad screening, and richer exercises for finalists or development cohorts.

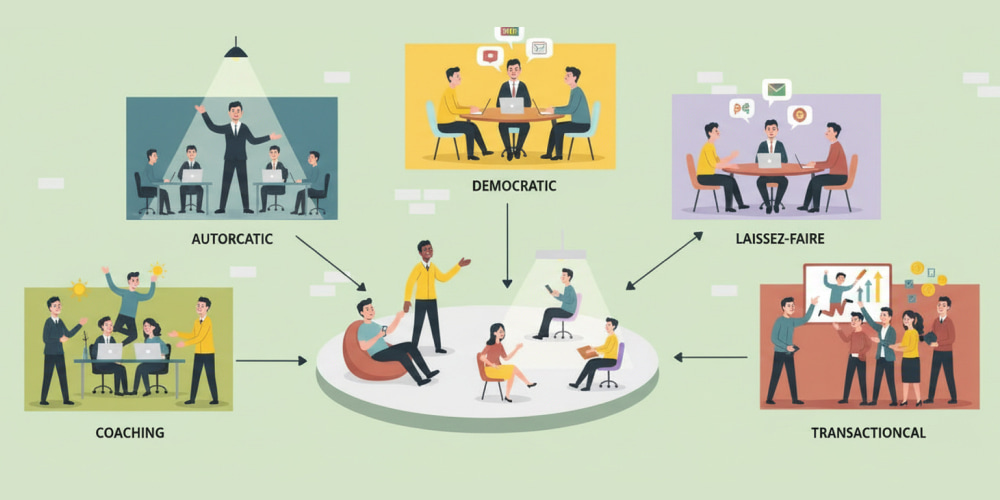

Supervisors gain specific insight from a management style assessment test because it anchors feedback in observable behaviors. Alongside that, structured interviews probe decision paths rather than outcomes alone, illuminating how leaders think when trade-offs collide. Work samples and in-basket exercises add realism, showing whether someone can prioritize, influence, and communicate under realistic constraints.

| Method | What It Reveals | Best Use Case | Time |
|---|---|---|---|
| Behavioral Simulation | Influence tactics, prioritization, resilience | Succession and senior roles | 2–4 hours |
| Structured Interview | Judgment, learning agility, pattern recognition | Mid-to-senior hiring | 60–90 minutes |
| Work Sample | Communication clarity, analytical rigor | Role-specific screening | 45–120 minutes |
| Short Inventory | Baseline tendencies and risk flags | Early filtering at scale | 10–20 minutes |

Budget-conscious teams can pilot a free trial of a management style assessment to see the scoring model in action. Pilots reveal whether the competencies align with your framework, whether the reports give coaches something concrete to work with, and whether the candidate experience matches your employer brand. From there, blend quick filters with deeper exercises to get a complete picture without slowing hiring.

- Use brief screeners to narrow the funnel quickly and fairly.

- Add realistic tasks to test problem-solving in context.

- Calibrate with benchmarks to maintain consistent standards.

- Translate findings into concrete, role-specific action items.

Implementation Roadmap and Best Practices

Rolling out an evaluation program requires stakeholder alignment, a clear competency model, and thoughtful communication. Start by defining success profiles rooted in actual business priorities, not generic traits. Partner with hiring managers and coaches early so the behaviors you measure are the ones they will reinforce. Train interviewers, and set up feedback rituals so insights turn into growth.

Early-stage startups often choose a free management style assessment during hiring sprints to stretch limited budgets. Larger enterprises typically build a blended stack (scalable inventories for volume, plus immersive simulations for senior roles), then integrate results into HCM systems to track progress. Regardless of size, document your rubric, score anchors, and decision rules to keep the process defensible and repeatable.

Change management matters as much as tool selection. Socialize the purpose of evaluation as development-first, and separate growth feedback from compensation timing whenever possible. Offer opt-in coaching, share sample reports so participants know what to expect, and make sure leaders model the behavior by taking the same exercises and discussing their own plans openly.

- Define success profiles tied to strategy and outcomes.

- Pilot, measure candidate experience, and refine rubrics.

- Train assessors to reduce variance and bias.

- Close the loop with coaching, learning paths, and milestones.

Reading Results, Validity, and Ethical Use

Interpreting results correctly starts with understanding the instrument’s purpose and evidence base. Reliability tells you consistency; validity tells you that the tool measures what matters. Combine numerical scores with behaviorally anchored examples to avoid over-indexing on any single data point. Always cross-check with references and performance artifacts to build a fuller picture of capability and context.
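As a rough illustration of the reliability half of that distinction, the sketch below computes Cronbach's alpha, one common internal-consistency statistic. The article does not name a specific statistic, so the choice of alpha, the function name `cronbach_alpha`, and the sample responses are all assumptions made for illustration.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Estimate internal consistency for a multi-item inventory.

    responses: list of rows, one per respondent; each row is a list of
    numeric item scores in the same item order.
    """
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")

    # Variance of each individual item across respondents.
    item_variances = [
        pvariance([row[i] for row in responses]) for i in range(n_items)
    ]
    # Variance of each respondent's total score.
    total_variance = pvariance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Hypothetical 1-5 ratings from five respondents on a four-item scale.
    sample = [
        [4, 5, 4, 4],
        [2, 2, 3, 2],
        [5, 4, 5, 5],
        [3, 3, 3, 4],
        [1, 2, 1, 2],
    ]
    print(f"alpha = {cronbach_alpha(sample):.2f}")
```

Values closer to 1 indicate items that move together. What counts as acceptable depends on the stakes of the decision and should come from the provider's technical documentation, not from this sketch.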

Universities can strengthen their career centers by offering students a management style self-assessment that links campus projects to leadership strengths. In professional settings, managers can translate report insights into 90-day plans: identify one behavior to start, one to stop, and one to sustain, each anchored to measurable outcomes. Ethical use also means transparency, informed consent, and accessible feedback that respects participants and supports growth.

Finally, governance keeps the system fair. Periodically audit score distributions across demographic groups, refresh norms, and retire items that no longer predict success. Treat the tools as decision support, not decision replacement, and maintain human oversight for complex judgments where context determines the right call.

- Blend quantitative scores with qualitative evidence.

- Set clear thresholds, but allow expert review for edge cases.

- Monitor adverse impact and recalibrate as needed.

- Translate insights into concrete, time-bound development actions.
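The adverse-impact monitoring described above can be sketched as a comparison of selection rates across groups. This is a minimal example, assuming the commonly cited four-fifths rule of thumb as the flagging threshold. The article does not prescribe a threshold, and the function names, group labels, and counts below are hypothetical.

```python
def selection_rates(outcomes):
    """Map each group to its selection rate.

    outcomes: dict of group label -> (number selected, number assessed).
    """
    rates = {}
    for group, (selected, assessed) in outcomes.items():
        if assessed <= 0:
            raise ValueError(f"group {group!r} has no assessed candidates")
        rates[group] = selected / assessed
    return rates


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def flag_for_review(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold.

    The 0.8 default reflects the four-fifths rule of thumb (an assumption
    here); a flag is a prompt for expert review, not a conclusion.
    """
    ratios = impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical pass counts from one assessment cycle.
    cycle = {
        "group_a": (45, 100),
        "group_b": (30, 90),
        "group_c": (20, 40),
    }
    for group, ratio in sorted(impact_ratios(cycle).items()):
        print(f"{group}: impact ratio {ratio:.2f}")
    print("flag for review:", flag_for_review(cycle))
```

A flagged group is a signal to look closer at sample sizes, item content, and scoring rules. In keeping with the decision-support framing, the result feeds human review and does not replace it.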

FAQ

How long does a typical evaluation take?

Most end-to-end processes range from 45 minutes for an initial screener to several hours for role simulations, with the total time calibrated to seniority and decision criticality. Organizations often split components across days to reduce fatigue and improve data quality while maintaining candidate engagement.

What evidence should I look for in a quality instrument?

Seek documented reliability, criterion validity tied to real performance, and transparent scoring. Reputable providers share technical manuals, norm groups, and behaviorally anchored models that align with your competency framework and role expectations.
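For teams that want to sanity-check criterion validity on their own data, one simple starting point is the correlation between assessment scores and a later performance measure. The sketch below computes a Pearson correlation by hand. The function name `pearson_r` and the paired scores are hypothetical, and a real validation study would also need adequate sample sizes and attention to range restriction.

```python
from math import sqrt


def pearson_r(scores, performance):
    """Pearson correlation between assessment scores and performance ratings.

    Both arguments are equal-length sequences of numbers, paired by person.
    """
    n = len(scores)
    if n != len(performance):
        raise ValueError("inputs must be paired, one value per person")
    if n < 2:
        raise ValueError("need at least two people")

    mean_s = sum(scores) / n
    mean_p = sum(performance) / n

    # Sum of cross-products and sums of squared deviations.
    cross = sum((s - mean_s) * (p - mean_p) for s, p in zip(scores, performance))
    dev_s = sqrt(sum((s - mean_s) ** 2 for s in scores))
    dev_p = sqrt(sum((p - mean_p) ** 2 for p in performance))
    if dev_s == 0 or dev_p == 0:
        raise ValueError("one input has no variation; correlation is undefined")

    return cross / (dev_s * dev_p)


if __name__ == "__main__":
    # Hypothetical data: assessment score at hire, manager rating a year later.
    assessment = [62, 71, 55, 80, 68, 74]
    rating = [3.1, 3.8, 2.9, 4.2, 3.3, 3.6]
    print(f"r = {pearson_r(assessment, rating):.2f}")
```

A coefficient computed on a handful of people is noisy, so treat a pilot result as a prompt to ask the provider for their documented validity evidence.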

How do I ensure a positive candidate experience?

Communicate purpose, expectations, and timing upfront, provide accessible instructions, and return practical feedback where possible. Candidates value relevance and fairness, so mirror real work challenges, minimize redundancy, and offer support channels for questions.

Can small teams start without big budgets?

Yes. Smaller firms can pilot low-cost screeners, validate outcomes on a small cohort, and scale the components that prove predictive, practical, and well-received. Educators seeking broader access may pair a free management style self-assessment with workshops to boost participation.

How should leaders act on the insights?

Turn findings into a focused plan with specific behaviors, practice reps, and success metrics. Pair participants with coaches or mentors, embed checkpoints into one-on-ones, and review progress quarterly to reinforce learning and sustain momentum over time.

Supercharge your leadership pipeline by making evaluation a repeatable habit, not a one-time event. When insights fuel daily decisions, teams execute with clarity, resilience, and measurable impact.